Does Automation in Data Modeling make sense?

Humans like to automate repetitive tasks. From factory floors to our homes, technology continues to replace manual labor with robotic precision. We make cars on assembly lines, mow our lawns, and vacuum our floors using machines designed to tirelessly deliver consistent quality and output, unburdened by fatigue or boredom.

Automation isn't just about saving time; it's about elevating efficiency, reliability, and quality.

Automation extends for menial tasks far beyond physical labor. Consider the aviation industry, where autopilot manages almost 90% of a typical commercial flight. Similarly, data engineers recognize that automation tools can efficiency in building and maintaining data warehouses. While workflow and transformation automation are widely accepted, the potential for automating data modeling is still surprising. Traditionally viewed as a task demanding deep analysis, collaboration, and human expertise, data modeling is in fact ripe for automation, as we'll explore.

Lightening the heavy load of data modeling

Data modeling typically begins when you create a conceptual data model. Normally this would be a collaborative effort involving data architects, data modelers, data engineers, business analysts, and business stakeholders to understand the organization's requirements and accurately reflect business goals and processes. Upon successful validation by the business, this conceptual model is restructured into a logical one. This includes all the details about the entities, attributes, relationships, and more. Data engineers need to demonstrate communication, analytical thinking, model design, and SQL skills to master these steps. Tools like ERwin, MS Visio or Toad help with the documentation but do not provide automation.

Bridging the gap: logical to physical data modeling

Here, the magic happens when translating the logical model into the physical database schema. This involves defining table structures, attributes, data types, and generating the necessary SQL code – a time-consuming and error-prone process.

Agile Data Engine automates rote data warehousing tasks

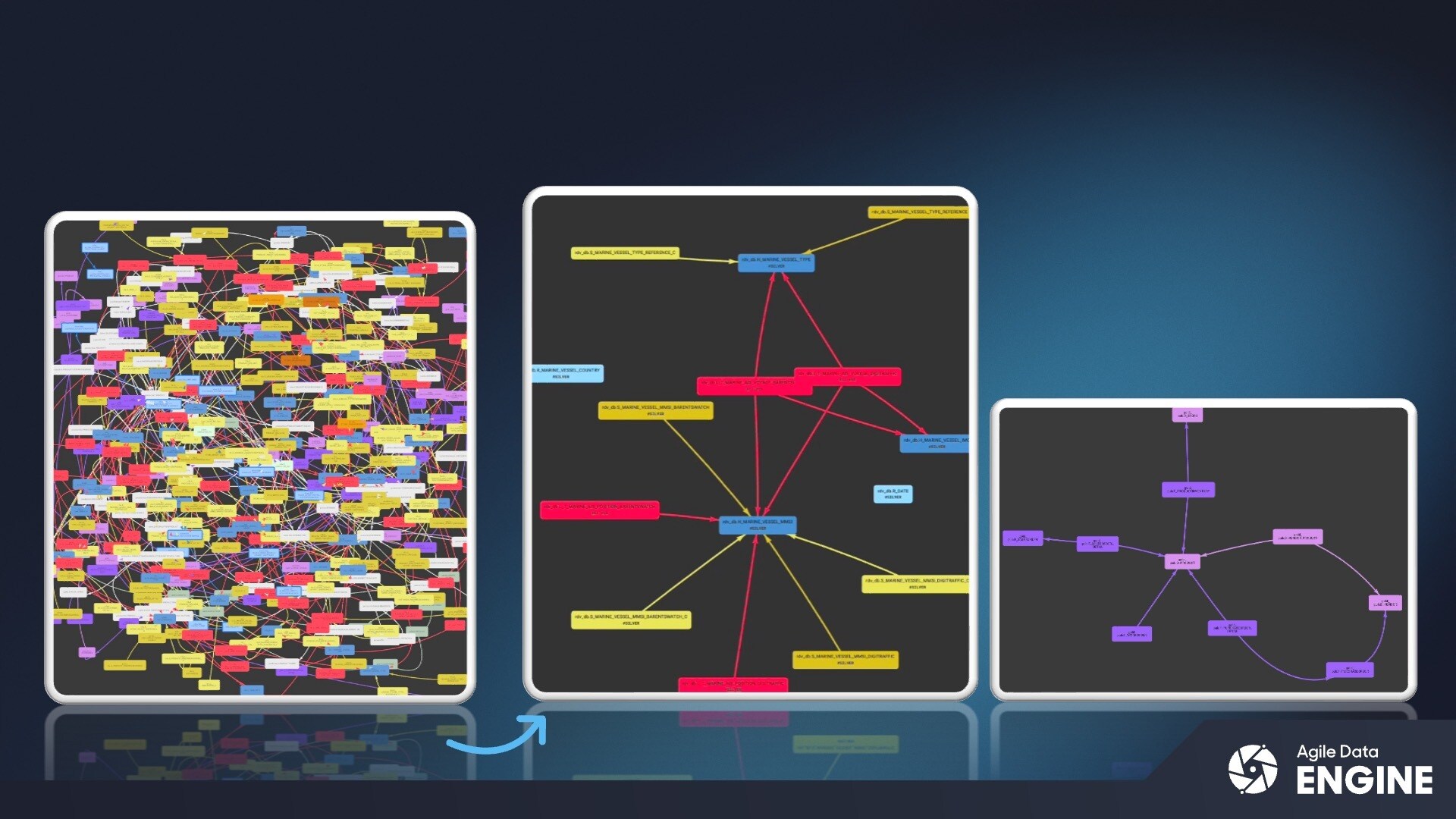

By generating SQL and Python code and ensuring consistency between the logical and physical models, Agile Data Engine expands your team's strategic capabilities through automation. It eliminates manual coding errors, accelerates development, and simplifies maintenance. Moreover, changes to the logical model can be instantaneously reflected in the physical database. Let's dive into what this means:

- Automatic object and attribute creation: Specific tables or views require consistent, pre-defined sets of attributes. Engineers don’t have to remember them and most importantly don’t have to code them. Templates automatically insert them and create the SQL code accordingly. For example: Data Vault tables require a specific set of mandatory attributes and a consistent way of handling them, such as management of data vault keys, business keys, time attributes, source load-related metadata, delta loads tracking information, etc.

- Uniform transformations: The key to successful data warehouse automation lies in leveraging reusable and uniform transformations. Once they have been defined, they can be applied across the entire data warehouse. These components not only enhance consistency and reduce redundancy, but also simplify maintenance and updates. The means changes propagate automatically across all instances, saving time and ensuring consistent improvements and fixes throughout the data warehouse.

- Continuous Integration and Delivery (CI/CD): CI/CD ensures that data models are consistently promoted, tested, validated, and deployed in sync with application code. New code is automatically generated and seamlessly merged with existing code and checked for consistency, facilitating the continuous delivery of data solutions. This approach enables rapid iteration and reliable deployments. Automated testing capabilities rigorously validate data models, ensuring that changes do not introduce errors or inconsistencies.

- Naming conventions: Standards are important for guiding development, knowledge transfer and clarity. This uniformity not only reduces errors but also enhances data quality and reliability. Thanks to pre-configured rules, automation can rigorously apply these when creating database objects. For example, the name of a Data Vault hub entity should always start with a ‘H_’. These rules apply all the way down to the attributes (for example all hubs will store the business keys in DV_BUSINESS_KEY column and if the keys are constructed by multiple values, they will be separated by a tilde sign).

- Error-checking and validation: Manual data modeling is prone to human error, whether in defining relationships, setting data types, or applying constraints. Automated validations can identify inconsistencies and problems early on.

- Simplified changes: Certain changes, while not overly complex, can sometimes take a lot of time and require intense focus. Common example are data type changes and moving an attribute's position. It's easy to make a wrong step which would require a complete and costly reload of data. Automation helps avoid these by generating precise code that handles database schema changes and preserves data.

- Synchronized logical and the physical models: Ideally, you keep the logical and the physical models in sync. Lack thereof can lead to gaps in documentation, wasted time, and modeling issues. Things can get messy when you attempt to keep track of the deployment status. Agile Data Engine captures the logical model and translates it into a physical model. Automated sync includes not only objects definitions but also the load logic and the deployment history.

- Auto-documentation: Automation can generate comprehensive documentation, making it easier for team members to understand and work with the models, i.e. data engineers don’t have to worry about updating documentation in various spots.

Robust data modeling: the cornerstone for data warehousing success

A robust data model is the cornerstone of any successful data warehouse. It underpins data quality, consistency, and the ability to derive meaningful insights. To maximize the value of this critical asset, data engineers need to focus on the strategic aspects of data modeling without the distraction of repetitive tasks.

Automation is the key to unlocking this potential. By automating mundane and time-consuming processes, data engineers can dedicate more time to crafting innovative solutions, ensuring data quality, and aligning with evolving business needs. This strategic shift empowers organizations to adapt swiftly to changing market dynamics and extract maximum value from their data. While dedicated modeling tools may excel in creating detailed logical models, Agile Data Engine excels at bridging the gap between design and implementation.

Want to learn more? Take a look at the following demo of Agile Data Engine to get sense for the power of automation in data modeling. Please contact us if you want to see more.