Data Vault Automation

Accelerate resilient data warehouse development with automated Data Vault modeling and standardized transformations, delivering the agility, traceability, and scalability that the Data Vault methodology was designed for. Agile Data Engine was developed with input from experienced Data Vault professionals and is fully aligned with Data Vault 2.0 principles.

Why choose Agile Data Engine for Data Vault?

Up to 80% faster Data Vault implementation

Native support for all modern cloud database platforms

Compliance-ready metadata and audit trail

Designed by experts, built for enterprise scale

Become truly agile with Data Vault and Agile Data Engine

Accelerate Data Vault implementation by up to 80%

While powerful, Data Vault can be time-consuming to implement manually. Agile Data Engine (ADE) automates both data modeling and transformation logic, cutting development time by up to 80%. This allows your team to focus on delivering insights instead of building infrastructure.

Ideal for regulated industries

With a metadata-driven approach and full audit-trail capabilities, Data Vault together with Agile Data Engine is especially well-suited for regulated sectors like finance and healthcare. It helps you meet compliance requirements without compromising agility.

Built for scale and resilience

Agile Data Engine supports both horizontal scalability (more source integrations) and vertical scalability (large data volumes), helping you build a resilient, future-proof Data Vault architecture from the ground up.

How it works: Practical capabilities for Data Vault automation

Agile Data Engine delivers full-lifecycle automation for your Data Vault environment:

Design conceptual business entities and logical Data Vault models directly in the platform.

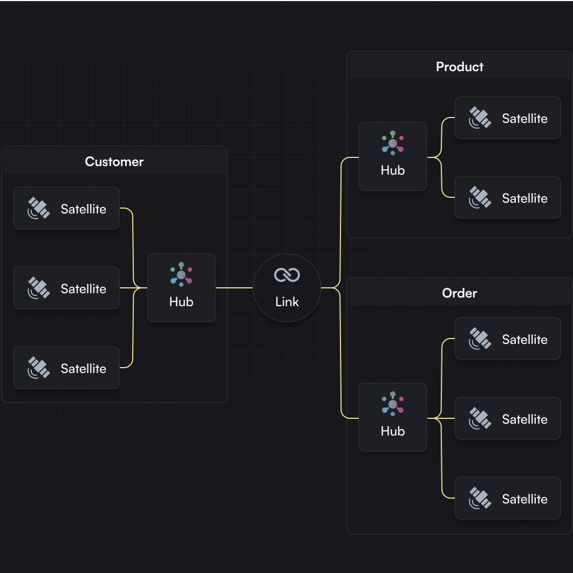

Automatically generate consistent and version-controlled physical models.

Full automation of all core Data Vault 2.0 object types:

Hubs, Links, Satellites (including Effectivity, Status, and Multi-active Satellites), Point-in-Time (PIT) tables, and Non-historized Links.

Simply map sources to targets; ADE auto-generates optimized SQL transformation scripts.
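To make the idea of generating load SQL from a source-to-target mapping concrete, here is a minimal Python sketch. It is not ADE's actual generator or output: the function name `hub_load_sql`, the column names (`load_dts`, `record_source`, the `_hk` suffix), and the table names are illustrative assumptions, and the SQL is deliberately generic, since hashing and casting functions differ between platforms.

```python
def hub_load_sql(hub: str, source: str, business_key: str, record_source: str) -> str:
    """Render an insert-only hub load for a single-column business key.

    Hypothetical helper for illustration; naming conventions are assumed.
    """
    hash_key_column = f"{hub}_hk"
    # Normalize the business key before hashing so ' abc ' and 'ABC' match.
    hash_expression = f"MD5(UPPER(TRIM(src.{business_key})))"
    lines = [
        f"INSERT INTO {hub} ({hash_key_column}, {business_key}, load_dts, record_source)",
        f"SELECT DISTINCT {hash_expression}, src.{business_key}, CURRENT_TIMESTAMP, '{record_source}'",
        f"FROM {source} AS src",
        "WHERE NOT EXISTS (",
        f"  SELECT 1 FROM {hub} AS tgt",
        f"  WHERE tgt.{hash_key_column} = {hash_expression}",
        ")",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Assumed example mapping: staging table stg_customer feeds hub_customer.
    print(hub_load_sql("hub_customer", "stg_customer", "customer_id", "CRM"))
```

The point of the sketch is that one mapping (source table, target hub, business key) is enough to derive a repeatable, insert-only load statement; a real generator would also handle composite keys and platform-specific SQL.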

Dependency management is built-in, ensuring reliable and repeatable data flows.

Use built-in data model and load templates or configure your own reusable components to fit enterprise standards.
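As a rough illustration of what dependency management means for load ordering, the sketch below derives a valid execution order from a load graph using Python's standard `graphlib` module. The table names and the shape of the graph are assumptions made for this example; it does not describe how ADE resolves dependencies internally.

```python
from graphlib import TopologicalSorter

# Hypothetical load graph: each object maps to the objects it must wait for.
# Raw vault objects depend on staging; the PIT table depends on the hub and
# satellite it is built from.
dependencies = {
    "hub_customer": {"stg_customer"},
    "sat_customer_details": {"stg_customer"},
    "hub_order": {"stg_order"},
    "link_customer_order": {"stg_order"},
    "pit_customer": {"hub_customer", "sat_customer_details"},
}


def load_order(graph: dict) -> list:
    """Return one valid execution order; raises graphlib.CycleError on cycles."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for step, name in enumerate(load_order(dependencies), start=1):
        print(step, name)
```

Any order returned this way loads staging before the raw vault objects and the raw vault objects before the PIT table, which is what makes a run repeatable.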

Enforce consistency in the data team while allowing for customization.

Agile Data Engine’s smart delta processing logic reduces rework and enhances performance for incremental loads.
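One common way to process deltas in a Data Vault satellite is to compare a hash of the descriptive attributes (often called a hashdiff) against the latest stored version and insert only rows that changed. The sketch below shows that general technique in Python; it is an assumption about the pattern, not a description of ADE's delta logic, and the function and field names are hypothetical.

```python
import hashlib


def hashdiff(record: dict, attributes: list) -> str:
    """Hash the descriptive attributes in a fixed order with a delimiter."""
    values = []
    for name in attributes:
        value = record.get(name)
        values.append("" if value is None else str(value).strip())
    return hashlib.sha256("||".join(values).encode("utf-8")).hexdigest()


def changed_rows(incoming: list, latest_hashdiff_by_key: dict, key: str, attributes: list) -> list:
    """Keep only rows that are new or whose attributes differ from the latest version."""
    delta = []
    for row in incoming:
        digest = hashdiff(row, attributes)
        if latest_hashdiff_by_key.get(row[key]) != digest:
            delta.append({**row, "hashdiff": digest})
    return delta


if __name__ == "__main__":
    attrs = ["name", "city"]
    latest = {"C1": hashdiff({"name": "Ann", "city": "Oslo"}, attrs)}
    batch = [
        {"customer_id": "C1", "name": "Ann", "city": "Oslo"},  # unchanged
        {"customer_id": "C2", "name": "Bob", "city": "Rome"},  # new key
    ]
    print([r["customer_id"] for r in changed_rows(batch, latest, "customer_id", attrs)])
```

Because unchanged rows are filtered out before the insert, repeated runs over the same batch add nothing, which keeps incremental loads small and safe to rerun.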

A built-in CI/CD pipeline optimized for data warehousing enables iterative, “just-in-time” delivery of Data Vault structures.

Schema change automation means faster time-to-value and better reliability without disrupting existing processes.

A centralized metadata repository gives your team full visibility from source systems to raw vault and business vault layers.

Audit-ready lineage and traceability at every step.

ADE supports Data Vault implementation on major cloud database platforms such as Databricks, Snowflake, Microsoft Fabric, and Google BigQuery.

All key Data Vault features are built in, with no third-party plugins or custom workarounds required.