Make the Most of Your Cloud Data Warehouse

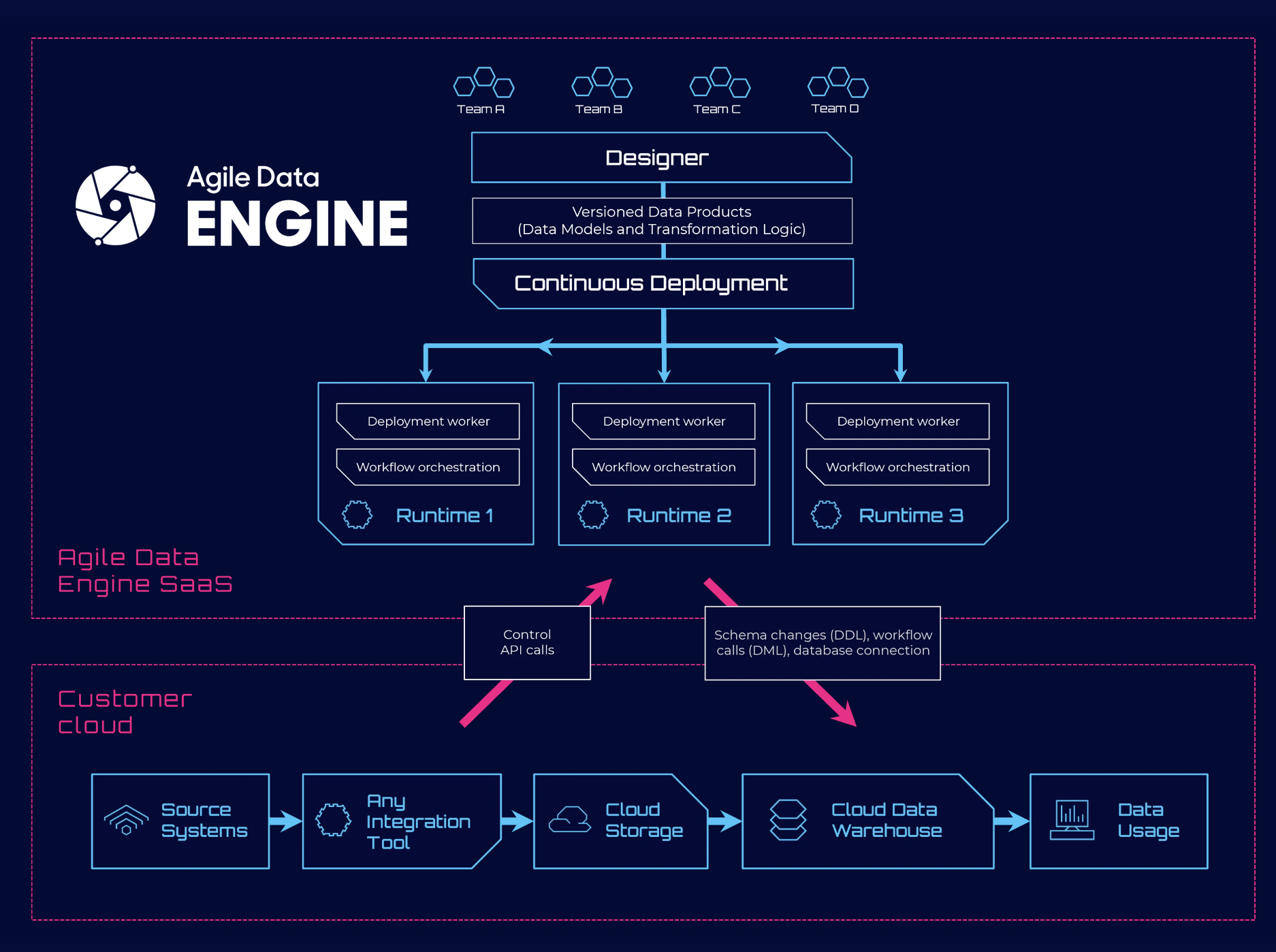

Agile Data Engine is a DataOps Platform used for building, deploying, and running cloud data warehouses. It operates and orchestrates data loads from your preferred cloud storage to your target database.

Agile Data Engine's SaaS DataOps platform forms a solid foundation for your data warehouse so you can focus on building business value on top of it, rather than keeping it on life support.

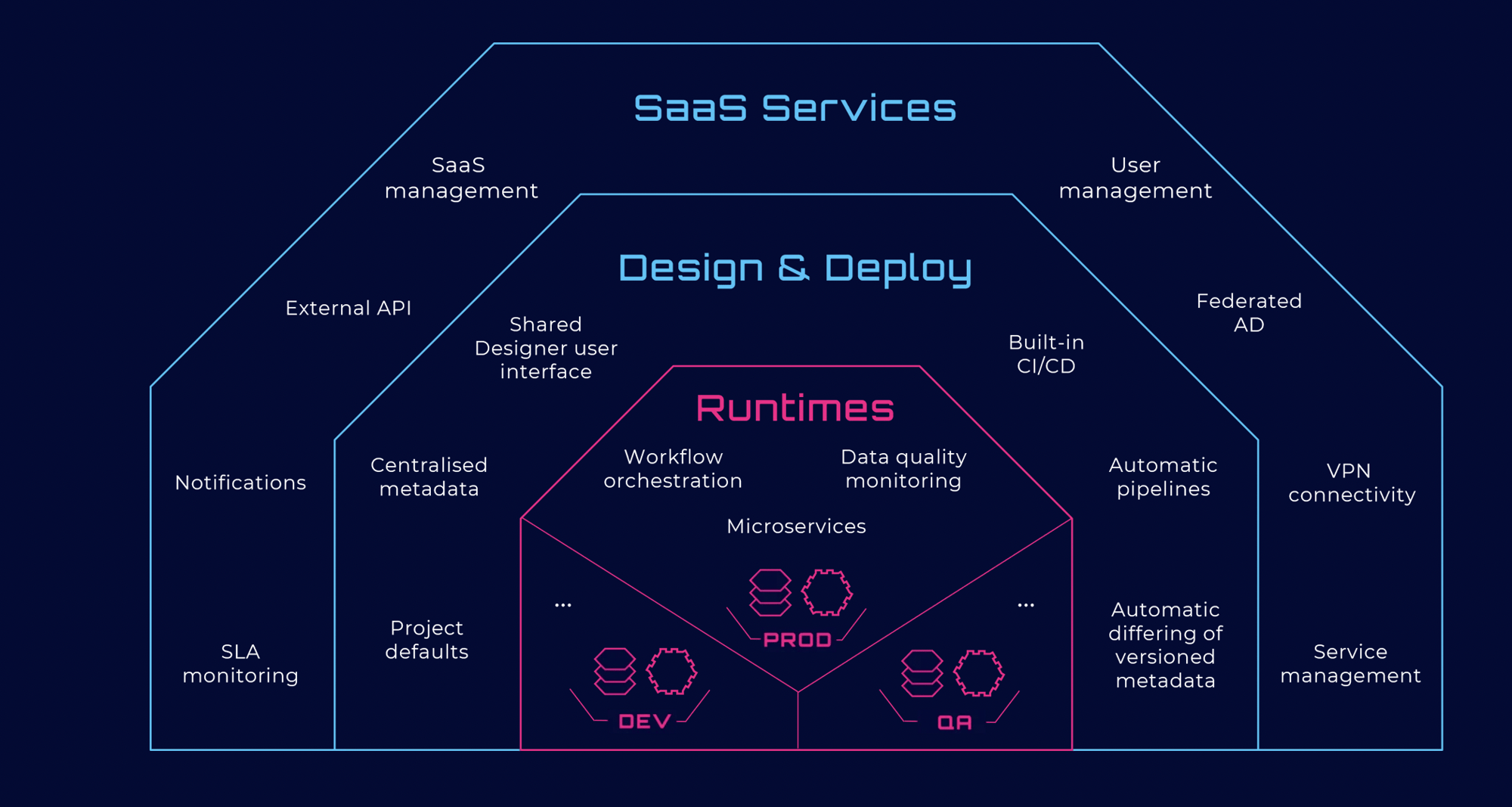

Agile Data Engine is the only platform offering all of these straight out of the box:

Design data models and data load definitions such as transformations, business rules, and dependencies for the data platform.

Out-of-the-box continuous delivery workflow with built-in CI/CD pipelines and automatic schema changes.

Load your data to your cloud database using metadata-based intelligent workflow generation, testing, orchestration, and monitoring.

Easy-to-use metadata interfacing for operationalization and integration with existing systems and processes.

Workflow monitoring and timely data quality tests, with the option to integrate with customer backend systems.

Visibility and insights into development and operations for data teams, including DataOps management KPIs and a Data Product Catalogue.

Agile Data Engine's metadata-driven approach has three core concepts:

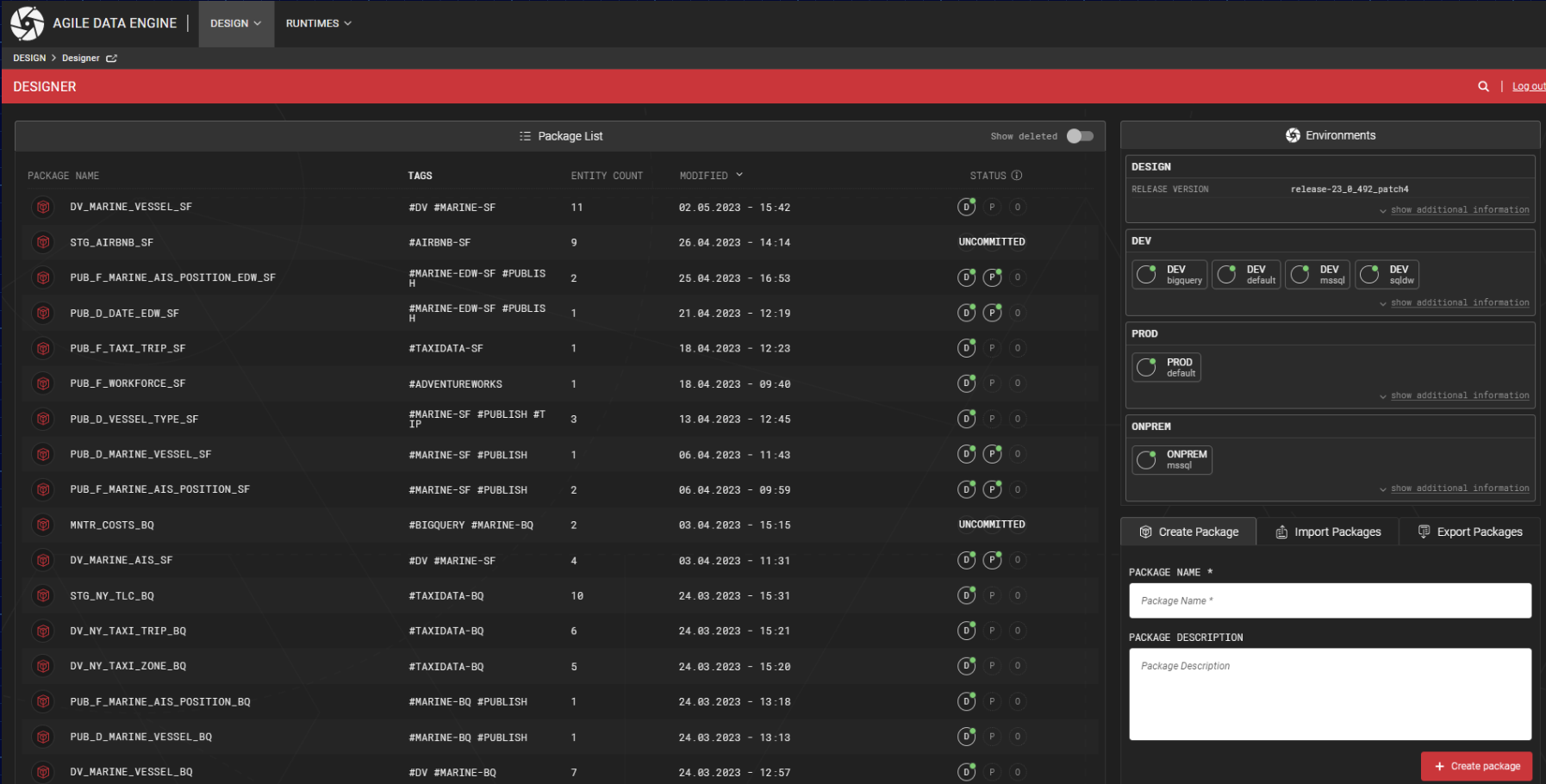

Everything is designed in the same web-based user interface and an environment shared by all developers and teams working with your data warehouse.

The data models, load definitions, and other information about the data warehouse content are stored in a central metadata repository with related documentation.

The actual physical SQL code for models and loads is generated automatically based on the design metadata. Also, load workflows are generated dynamically using dependencies and schedule information provided by the developers.
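As a rough illustration of the idea, the sketch below derives table DDL and a load order from a small metadata dictionary. It is not Agile Data Engine's actual implementation or API: the `ENTITIES` structure, the entity names, and the functions `generate_ddl` and `load_order` are all hypothetical, chosen only to show how SQL and a workflow order could be produced from design metadata and declared dependencies.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical design metadata: entity names, columns, and dependencies
# are illustrative assumptions, not a real Agile Data Engine format.
ENTITIES = {
    "stg_customer": {
        "columns": {"customer_id": "INTEGER", "name": "VARCHAR(200)"},
        "depends_on": [],
    },
    "stg_order": {
        "columns": {"order_id": "INTEGER", "customer_id": "INTEGER"},
        "depends_on": [],
    },
    "dw_customer_orders": {
        "columns": {"customer_id": "INTEGER", "order_count": "INTEGER"},
        "depends_on": ["stg_customer", "stg_order"],
    },
}


def generate_ddl(name, entity):
    """Render a CREATE TABLE statement from an entity's column metadata."""
    cols = ",\n".join(
        f"    {col} {dtype}" for col, dtype in entity["columns"].items()
    )
    return f"CREATE TABLE {name} (\n{cols}\n);"


def load_order(entities):
    """Return entity names ordered so each one follows its dependencies."""
    graph = {name: set(e["depends_on"]) for name, e in entities.items()}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for entity_name in load_order(ENTITIES):
        print(generate_ddl(entity_name, ENTITIES[entity_name]))
```

Because both the DDL and the execution order come from the same metadata, changing a column or a dependency in one place updates the generated SQL and the workflow together, which is the benefit the metadata-driven approach is aiming for.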

More complex custom SQL transformations are added as Load Steps within the metadata, so they benefit from the same automation and shared design experience.

We can't speak for our customers, but we can tell you our customer satisfaction rating exceeds 97%. Hear it from them:

DataOps and data in general are ever-evolving topics. Head over to the blog to stay up to date.

A look at how Agile Data Engine and Tampere University teamed up to bring hands-on data warehousing training to future data professionals.

ADE Summit 2025 recap: Key insights on scaling data value, platform sustainability, and AI-ready growth. From data management to business impact.

Agile Data Engine CEO Matti Karell shares how ADE is moving from foundation to AI-powered mainstream with automation, scale, and a Databricks partnership.

Whether you're just getting started with a data transformation, working to move from on-prem to cloud, or curious to hear how we can help save you millions and grow your data warehouse's lifetime value, we're happy to chat!

Join our growing community of data enthusiasts.